Existing product managers are desperate to understand what AI Product Management is. And I don’t blame them.

AI PM has changed the job market completely.

There are almost 700% more openings than last year. The role pays on average 50% more than non-AI PM jobs. But the problem is that nobody, not even the employers, really knows what AI PMs actually do.

I have been an AI PM for over two years (and a PM for 13). I have seen the confusion firsthand. I am writing this guide to alleviate some of that confusion.

Let’s go!

Why Is the AI PM Role So Confusing?

Most AI PM roles are super new, especially the ones that use LLMs and conversational experiences to build new solutions.

We already know what to expect from traditional product management: understand customers, identify their pains, work with engineers and designers to build solutions, and launch products.

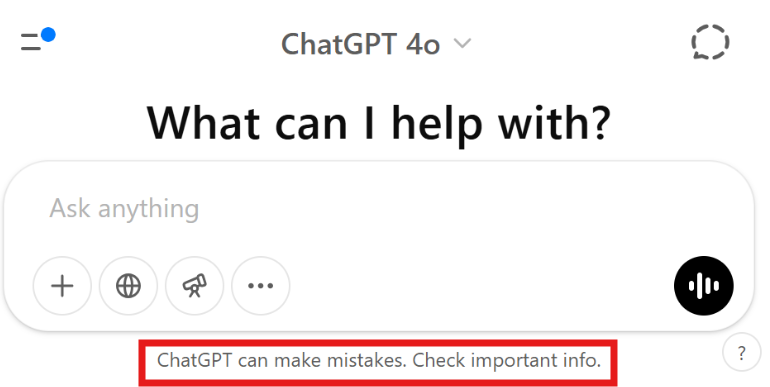

But AI product management is different. AI products don’t work like regular non-AI products. Non-AI products do what you tell them to do, every time. AI products make predictions, and predictions can be wrong or change from one run to the next.

And that leads to questions that traditional PMs don’t have to answer:

- How do you write requirements for something that can behave in unpredictable ways?

- How do you explain to users why your product recommended something offensive?

- What do you do when your product gives different answers to the same question?

AI PMs are responsible for answering these questions.

At the least, they are responsible for thinking about them.

What Does an AI PM Actually Do?

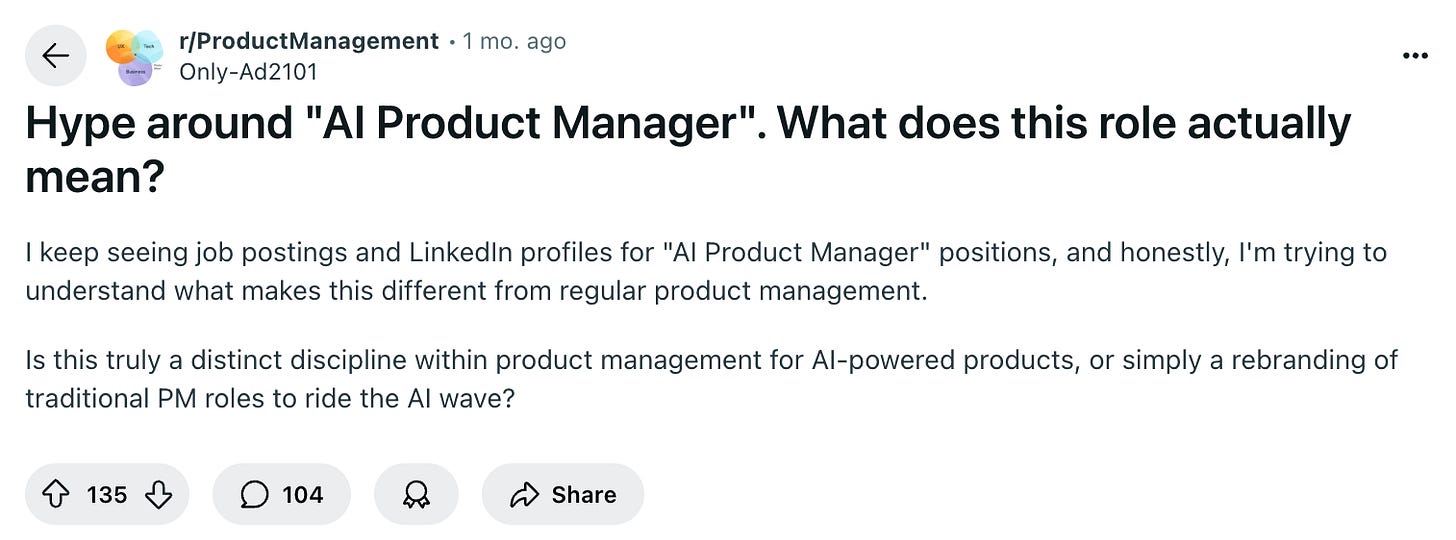

Here is my simple (work in progress) model of what AI PMs do.

The best way to define a role is to think of all the questions that the person will be required to answer. That is how I also think of traditional product management. (Detailed here)

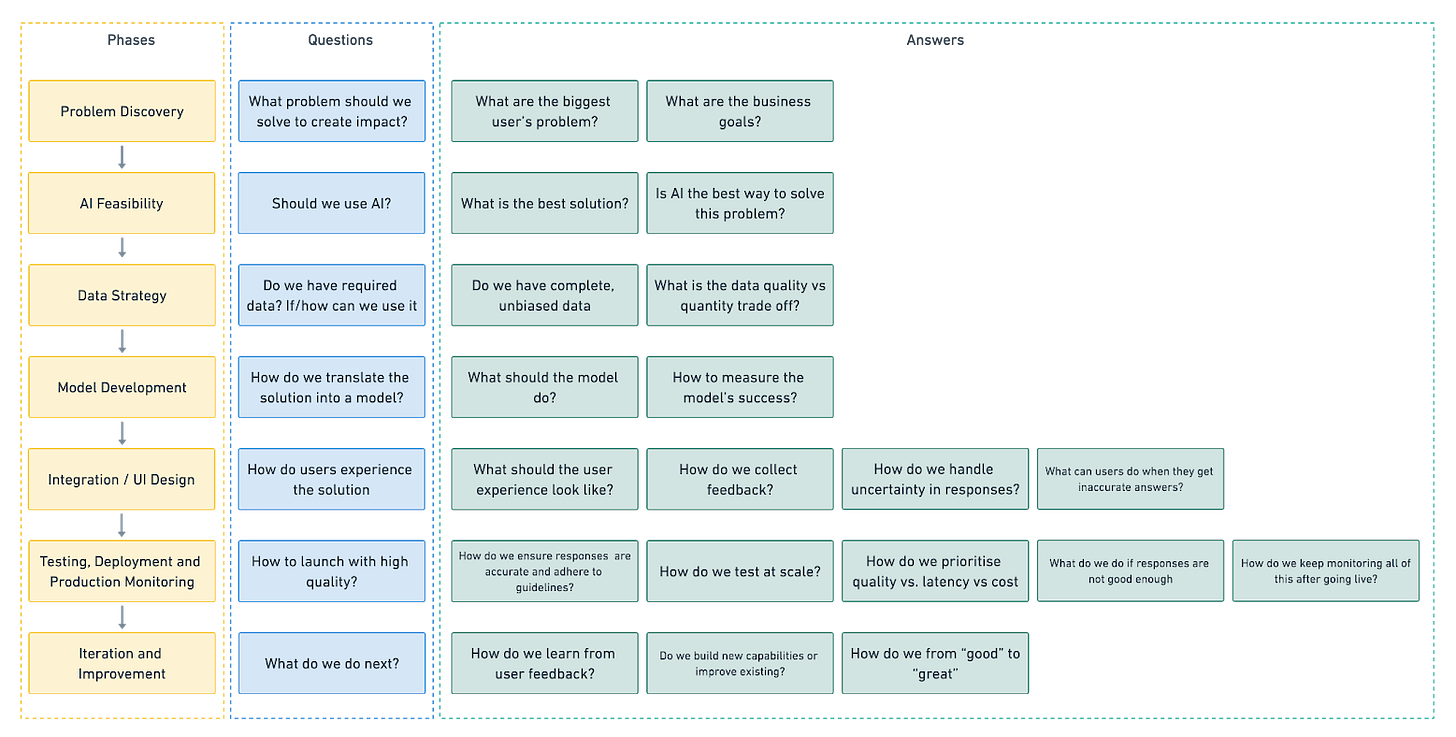

First things first, even AI PMs follow the same product lifecycle as all other PMs.

Here’s a quick refresher:

- Planning: Decide what to do, what not to do.

- Alignment: Know the right people, and get them to agree to the plan.

- Execution: Translate the plan into a product.

- Measure: Determine whether your product is successful, and why or why not.

Now, let’s understand all the questions AI PMs have to answer:

Questions AI Product Managers Need To Answer

Question #1: What Problem Should We Solve?

The first job of every PM is to discover the most painful user problem that will also create business impact.

If you select the wrong problem, everyone’s effort will go to waste. You will either build something that no one uses or something that creates zero business value.

I won’t get into more details here. This step is the same as it is for non-AI PMs.

Question #2: Should We Use AI to Solve It?

Once you have identified the right problems to solve, you need to assess whether you really need AI to solve them.

This sounds simple. But it’s not. I can tell you from experience: most problems don’t need AI. Simple rule-based systems often work better, cost less, and create more impact. So let’s understand…

When should we use AI to build products?

There is no perfect answer to this question.

But here are a few guiding principles that will help you reach the right answer.

AI is a good fit when the solution depends on recognizing patterns, not following instructions.

In other words, when the problem involves judgment, context, or prediction, AI can help. If the problem can be written as “If X happens, do Y,” you probably don’t need AI.

But if the answer changes with time, data, or behavior, AI becomes useful. Here are five conditions where AI usually makes sense and what they look like in practice.

Condition 1. When Rules Break Too Often

AI helps when the logic behind decisions keeps changing quickly.

For example, to detect payment fraud, you can begin with simple rules like “flag all transactions above $10,000.” That might work for a short while. But fraudsters adapt.

They use smaller amounts, new methods, or different time patterns. The rules quickly become outdated.

An AI model will work better in this case because it can learn from changing behaviors, spot new patterns, and adapt to them automatically (instead of you rewriting the logic every week).

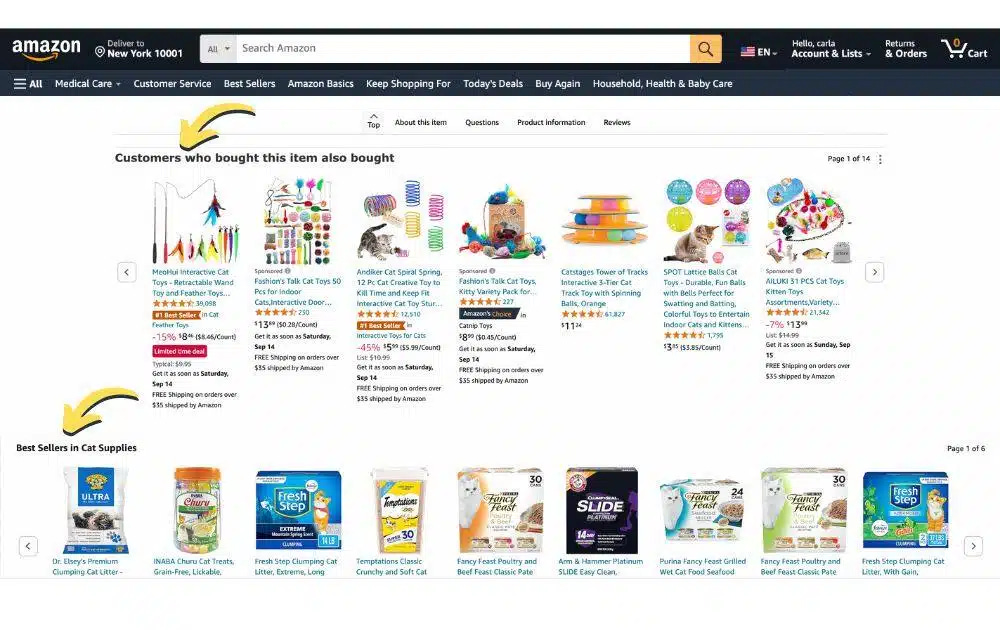
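To see why a fixed rule ages badly, here is a minimal Python sketch of the $10,000 rule from the fraud example. The function name and the sample amounts are made up for illustration; a real fraud system would look at far more than the amount.

```python
# Hypothetical static rule from the text: flag anything above $10,000.
RULE_THRESHOLD = 10_000


def rule_flags(amount: float) -> bool:
    """Return True when the fixed rule would flag this transaction."""
    return amount > RULE_THRESHOLD


# A fraudster who wants to move $30,000 adapts by splitting it up.
single_transfer = [30_000]
split_transfers = [9_500, 9_500, 9_500, 1_500]

flagged_single = [amt for amt in single_transfer if rule_flags(amt)]
flagged_split = [amt for amt in split_transfers if rule_flags(amt)]

print(f"Single transfer flagged: {len(flagged_single)} of {len(single_transfer)}")
print(f"Split transfers flagged: {len(flagged_split)} of {len(split_transfers)}")
```

The split transfers move the same total without tripping the rule. That is the kind of adaptation a model trained on behavior has a better chance of catching.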

Condition 2. When Data Has Hidden Patterns

AI is powerful when the information you need is hidden in large volumes of data.

For example, think of how Spotify recommends songs. You could make a simple rule like “if someone likes Coldplay, show them Imagine Dragons.”

But that logic misses all the other signals: what time users listen, which songs they skip, what mood they are in. These patterns are too complex for human-created rules to find.

However, AI can find and learn from them automatically. If your product’s success depends on recognizing patterns that aren’t obvious or humanly describable, AI is the right tool.

Condition 3. When the Environment Keeps Changing

Some products work in fast-moving environments where things change rapidly. Search engines, ad platforms, and chatbots are perfect examples.

What people search for, talk about, or click on changes every hour.

A rule-based system would require constant manual updates. AI, however, can adjust automatically as it learns from new data. When your product must keep up with a world that changes daily, AI’s ability to adapt is a major advantage.

Condition 4. When Every User Needs a Unique Experience

AI also makes sense when each user expects a personalized experience. Netflix is a great example. It doesn’t show the same homepage to every user.

The sequence of movies, the images, and even the headlines are personalized based on your viewing history. This kind of personalization can’t be done efficiently with rules, because the number of possible user combinations is too large.

If your goal is to personalize the experience at an individual user level, AI is a good fit.

Condition 5. When Success Can Be Measured Clearly

Finally, AI only works when “better” can be defined in measurable terms. A machine learning (ML) model improves by optimizing toward a measurable signal. So if you can’t quantify improvement, it has nothing to learn from.

For instance, Spotify can measure success by tracking how often users listen to the recommendations.

That gives AI the learning signal it needs. But if the goal is vague, like “make Spotify listeners happier,” the model can’t improve because it doesn’t know what outcome to aim for.

AI needs measurable results to guide its learning.

Question #3: Do We Have the Required Data to Build an AI Product? What is the Best Way to Use It?

AI is only as good as its data. Once an AI PM determines that a problem is suitable for machine learning, they enter what many consider the most critical phase: data strategy.

AI PMs must design data collection strategies that provide the foundation for successful model training. They:

- Partner with legal experts to ensure privacy rules are followed

- Work with engineers to set up how data flows

- Create rules for managing data that will continue to work as the product gets bigger

The data collection phase often requires AI PMs to make complex trade-offs between data quality and data quantity.

They might discover that getting perfectly organized data, with every piece of information clearly categorized and tagged (the kind of labeled data that supervised ML models are trained on), would take six months and cost $100,000.

On the other hand, getting data that’s good enough to be useful, even if not perfectly organized, could happen in six weeks for $20,000.

These decisions require balancing technical requirements with business constraints while maintaining ethical standards around user privacy and consent.

AI PMs also establish the frameworks for ongoing data quality monitoring during this phase.

They create processes for detecting when incoming data starts to differ from training data, set up alerts for data quality issues, and design systems for continuously improving data collection based on model performance feedback.

This infrastructure becomes crucial later when models are deployed and need constant monitoring.
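One common way to check for this kind of drift is the Population Stability Index (PSI), which compares how a feature is distributed in training data versus live data. The sketch below is an illustration, not a production monitor: the bin count, the 0.25 alert level (a widely used rule of thumb), and the sample numbers are all assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 5) -> float:
    """Population Stability Index between a training sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a zero-width range

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the training range
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up numbers: live order values have shifted upward since training.
training_values = [float(v) for v in range(100)]
live_values = [v + 50 for v in training_values]

DRIFT_ALERT = 0.25  # assumed alert level, tune for your product
needs_attention = psi(training_values, live_values) > DRIFT_ALERT
print("Drift alert:", needs_attention)
```

A PM does not need to write this, but knowing that drift can be reduced to a single number makes it much easier to agree on an alert threshold with the data team.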

Question #4: How Do We Translate Data Into An ML Model That The Product Can Use?

Once the data is ready, the next step is to turn it into something the product can actually use: a working feature that users see and benefit from.

This is where AI PMs work closely with engineers and designers to bring the model into the product. The goal is to make the model useful, reliable, and easy to trust.

The user shouldn’t need to think about the AI or how it works.

They should just feel like the product understands them better.

AI PMs decide how the model shows up inside the product. Will it recommend something? Predict something? Summarize something? Or give a score?

Each choice affects the design. A recommendation might show confidence (“80% match”), a prediction might need context (“Based on your last 3 orders…”), and a summary might need an edit button for users to fix mistakes.

Because AI doesn’t behave the same way every time, PMs also design ways to handle errors and uncertainty.

What happens if the model is wrong? What should the product do when it’s not sure? Good AI PMs plan for this early, adding fallbacks, showing confidence levels, and giving users simple ways to correct or give feedback.

These feedback loops are critical. Every user action (a click, a correction, or a “thumbs up”) helps the model learn and improve over time.

AI PMs ensure these signals are captured safely and used to make the product better with real-world use.
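Here is a minimal sketch of the fallback idea mentioned earlier: when the model is not confident enough, the product does something safer instead of guessing. The `Prediction` class, the 0.7 cut-off, and the fallback message are all hypothetical and would differ for every product.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    answer: str
    confidence: float  # assumed to be a score between 0 and 1


CONFIDENCE_FLOOR = 0.7  # hypothetical cut-off, tune per product

FALLBACK_MESSAGE = "I'm not sure about this one. Let me connect you with a teammate."


def respond(prediction: Prediction) -> str:
    """Show the model's answer only when it clears the confidence floor."""
    if prediction.confidence >= CONFIDENCE_FLOOR:
        return prediction.answer
    return FALLBACK_MESSAGE


print(respond(Prediction("Your order ships on Friday.", 0.92)))
print(respond(Prediction("Your order ships on Friday.", 0.41)))
```

Where to set the floor is a product decision, not just an engineering one: a higher floor means fewer wrong answers but more handoffs to humans.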

Sometimes users want to know why the AI made a certain choice (“Because you liked…”). Other times, too much detail just slows them down.

The PM decides when to explain, when to simplify, and how to make users feel in control. Finally, the AI PM looks at how the new feature changes behavior.

Will users start relying too much on it? Could it create new risks or confusion? If so, the PM adds safeguards like clear boundaries, human review, or a simple “turn off” option.

This phase isn’t just about model accuracy. It’s about how people experience the model, making sure every interaction feels natural, useful, and trustworthy.


Question #5: How Do Users Experience The Product?

Once the model works, the next challenge is turning it into a real user experience. This is where solution design, integration, and UI design come together.

At this stage, AI PMs define what the experience looks like, how it behaves, how much control the user has, how to gain the user’s trust, and which feedback mechanisms will reveal what makes the user unhappy.

The answers to these questions will vary a lot based on the use case.

A customer service chatbot needs access to a company’s internal data (often through retrieval-augmented generation, or RAG) and must sound reliable and human. It might be important to focus on showing supporting evidence while responding to the customer’s query.

A song recommendation system needs to surface options instantly, without explaining itself. A travel itinerary planner must combine user preferences, time, and location data into clear, editable suggestions.

Each product type has different expectations, risks, and data needs, and the PM’s job is to design around them. The goal is to make AI feel natural.

When it works well, users feel like the product understands them better.

Question #6: How Do We Build And Launch With High Quality?

Once the data, model, and design are ready, it’s time to build and test the solution.

This is where quality becomes everything.

AI products can’t be trusted until they have been tested, not just for whether they “work,” but for how well they handle thousands of unpredictable real-world situations.

That’s what separates a prototype from a reliable product.

1. Define What Quality Means

Before testing anything, the AI PM defines what “good” looks like. There is no universal definition of quality. It depends on the product and the use case.

For a customer support chatbot, quality might mean:

- Accuracy: Did it answer correctly?

- Safety: Did it avoid making up or leaking information?

- Tone: Did it sound human and respectful?

For a recommendation system, quality might mean:

- Relevance: Are users engaging with suggestions?

- Diversity: Is it showing enough variety?

- Speed: Do results load instantly?

This step sets a clear bar for what counts as “ready.”

2. Build Evals (Evaluation Tests)

Traditional software can be tested with fixed inputs and outputs.

If A happens, expect B. AI doesn’t work like that.

Instead, it gives different answers depending on the data, the prompt, and even small variations in context. That’s why AI PMs use evals: structured tests that measure how the model performs across a range of realistic examples.

Think of evals as exam papers for the model.

Each question checks a different skill, and the goal is to see how often it behaves as expected.

Examples:

- For a chatbot, test 500 real customer questions. Review its answers for multiple dimensions such as correctness, clarity, and tone.

- For a summarization tool, test 100 sample articles and compare outputs to human-written summaries using automated metrics like ROUGE.

- For a spam filter, run thousands of labeled emails and measure precision (how many flagged were truly spam) and recall (how many spam emails it caught).
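The spam filter example is the easiest one to put into code. Here is a small sketch that computes precision and recall from labeled emails. The eight labels and predictions are invented for illustration; a real eval would run on thousands of emails.

```python
def precision_recall(labels: list[bool], predictions: list[bool]) -> tuple[float, float]:
    """Compute precision and recall, where True means 'spam'."""
    pairs = list(zip(labels, predictions))
    true_pos = sum(1 for label, pred in pairs if label and pred)
    false_pos = sum(1 for label, pred in pairs if not label and pred)
    false_neg = sum(1 for label, pred in pairs if label and not pred)

    # Precision: of everything flagged, how much was truly spam?
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    # Recall: of all real spam, how much did the filter catch?
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


# Invented sample: what each email really was vs. what the filter said.
is_spam = [True, True, True, False, False, False, False, True]
flagged = [True, True, False, True, False, False, False, False]

precision, recall = precision_recall(is_spam, flagged)
print(f"Precision: {precision:.2f}, Recall: {recall:.2f}")
```

In this sample, the filter flagged three emails and two were truly spam, while it caught only two of the four spam emails. Which of the two numbers matters more depends on whether a missed spam email or a wrongly blocked real email hurts your users more.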

There are three common kinds of evals:

- Automated evals: Run by scripts that check numeric metrics like accuracy, latency, or similarity. They are fast and scalable.

- Human evals: Done by reviewers who score outputs for quality, tone, or relevance, often using rubrics (like rating each response 1–5).

- Hybrid evals: Combine both. For example, using automation to filter outputs, then humans to judge borderline cases.

Good AI PMs mix all three. Automated evals catch large issues early, human evals capture nuance, and hybrid systems keep the process efficient. The point is not to perfect the model. It is to understand where the model fails, how often, and why.
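A hybrid eval can be as simple as a triage step: an automated score decides the clear cases, and only the borderline ones go to human reviewers. The sketch below assumes each output already has an automated score between 0 and 1. The function name, the thresholds, and the sample scores are hypothetical.

```python
def triage(
    scored_outputs: list[tuple[str, float]],
    pass_at: float = 0.8,
    fail_at: float = 0.4,
) -> dict[str, list[str]]:
    """Split outputs into auto-pass, auto-fail, and human-review buckets."""
    buckets: dict[str, list[str]] = {"auto_pass": [], "human_review": [], "auto_fail": []}
    for output_id, score in scored_outputs:
        if score >= pass_at:
            buckets["auto_pass"].append(output_id)
        elif score < fail_at:
            buckets["auto_fail"].append(output_id)
        else:
            # Borderline: automation is not sure, so a person decides.
            buckets["human_review"].append(output_id)
    return buckets


# Invented scores for four chatbot answers.
sample = [("answer-1", 0.95), ("answer-2", 0.55), ("answer-3", 0.10), ("answer-4", 0.80)]
result = triage(sample)
print(result)
```

Widening the gap between the two thresholds sends more outputs to humans, which costs more but catches more nuance.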

3. Do End-to-End Testing

Once the model passes evals, it’s connected to the full product. This stage checks how the model performs when real data, user interfaces, and infrastructure all come together.

For example:

- A chatbot might work fine alone, but break when linked to a company’s knowledge base.

- A recommendation system might deliver great suggestions, but slow down the page because of heavy API calls.

These integration tests help catch those real-world issues early.

4. Run Controlled Rollouts

After testing, the team launches to a small audience, such as internal users or a limited percentage of customers. This validates that the model behaves correctly in live conditions.

AI PMs track early metrics, such as user satisfaction, error rates, and engagement.

If quality holds, they expand the rollout gradually. If performance drops sharply, it’s often a sign that real user data differs from the training data and the model needs tuning before full launch.
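One common way engineers implement a percentage rollout is to hash each user ID into a stable bucket from 0 to 99. This is a sketch under assumptions, not a description of any specific feature-flag tool: the function name, the salt, and the user IDs are made up.

```python
import hashlib


def in_rollout(user_id: str, percent: int, salt: str = "ai-feature-v1") -> bool:
    """Deterministically place a user inside or outside a rollout."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket from 0 to 99
    return bucket < percent


# Invented user IDs for illustration.
users = [f"user-{n}" for n in range(1000)]
early_group = [u for u in users if in_rollout(u, 5)]
wider_group = [u for u in users if in_rollout(u, 20)]

print(f"5% rollout: {len(early_group)} users, 20% rollout: {len(wider_group)} users")
```

Because the bucket depends only on the user ID and the salt, a user who saw the feature at 5% keeps seeing it at 20%. That keeps the experience consistent while the rollout expands.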

5. Launch Only When Quality Is Proven

A good AI PM treats launch as a quality gate, not a deadline. If the product doesn’t meet its defined benchmarks, it doesn’t go out. Because once a weak model reaches users, fixing trust is far harder than fixing code.
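A quality gate like this can be written down as a simple check of eval results against the bar you defined earlier. The metric names and minimum scores below are hypothetical, borrowed from the chatbot example for illustration.

```python
# Hypothetical quality bar for a support chatbot (minimum acceptable scores).
QUALITY_BAR = {"accuracy": 0.90, "safety": 0.99, "tone": 0.85}


def failed_checks(eval_results: dict[str, float]) -> list[str]:
    """Return the metrics that fall below the bar (missing metrics count as failures)."""
    return [
        name
        for name, minimum in QUALITY_BAR.items()
        if eval_results.get(name, 0.0) < minimum
    ]


def ready_to_launch(eval_results: dict[str, float]) -> bool:
    """The product ships only when every metric clears its minimum."""
    return not failed_checks(eval_results)


# Invented eval results: strong accuracy and tone, but safety falls short.
latest_run = {"accuracy": 0.93, "safety": 0.97, "tone": 0.88}
print("Ready:", ready_to_launch(latest_run), "| Failing:", failed_checks(latest_run))
```

Writing the bar down before testing starts makes the launch conversation much shorter: the numbers either clear it or they don’t.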

High-quality AI products come from discipline: clear goals, measurable tests, structured evals, and patient iteration.

Question #7: What Do We Do Next?

Once an AI product is launched, the work doesn’t stop. In fact, this is where a new cycle begins. Unlike traditional software, an AI product is never finished.

It learns from data, adapts to changes, and sometimes even behaves differently as the world around it evolves. This means there is always room for improvement.

Now, that is a big challenge because it’s hard to know when to stop.

Every time you make the model better in one way, you might make it worse in another. For example, tuning it for more creativity might reduce accuracy.

Making it more cautious might slow down responses. AI PMs must constantly decide what “better” means for their product at a given time, and when improvement brings more risk than reward.

The most reliable way to guide these decisions is through feedback. Feedback shows where the product is working and where it isn’t. AI PMs collect two kinds of feedback: from users and from the system itself.

User feedback helps reveal how people actually experience the product. It tells you where users feel misunderstood, where they lose trust, or where the product surprises them in the wrong way.

A chatbot, for instance, might give technically correct answers but still sound rude or cold. That insight doesn’t come from data alone. It comes from listening to users.

PMs gather this feedback through surveys, ratings, in-product prompts, or customer support data.

System feedback comes from the model’s own behavior.

AI PMs look at performance metrics, logs, and error reports to identify patterns where the model struggles. Maybe it performs worse for a certain group of users, or fails on a specific type of input.

Each of these insights becomes a clue for the next improvement, sometimes by adding new data, retraining the model, or adjusting prompts and features.
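To spot a group of users the model struggles with, it helps to break one overall metric into segments. Here is a minimal sketch, assuming you log whether each response was correct along with a user segment. The segment names and the records are invented.

```python
from collections import defaultdict


def accuracy_by_segment(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Compute the share of correct responses for each user segment."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for segment, was_correct in records:
        totals[segment] += 1
        correct[segment] += int(was_correct)
    return {segment: correct[segment] / totals[segment] for segment in totals}


# Invented log: (user segment, was the model's response correct?)
log = [
    ("new_user", True),
    ("new_user", False),
    ("returning", True),
    ("returning", True),
]

by_segment = accuracy_by_segment(log)
print(by_segment)
```

Overall accuracy here is 75%, which hides the fact that new users get a correct response only half the time. Segment-level views like this are often where the next improvement comes from.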

The goal isn’t to chase perfection, because AI will never be perfect. The goal is to make meaningful, measurable progress, one iteration at a time.

In a Nutshell

AI Product Management isn’t a buzzword anymore.

It’s the next evolution of product management. The role builds on traditional PM skills but adds new demands: data fluency, ethical awareness, and comfort with uncertainty.

The confusion around AI PM is natural. The field is new, fast-moving, and looks different in every company.

If you want to become an AI PM, start by building data literacy, learning how ML models work, and understanding why LLMs hallucinate. As AI becomes core to more products, demand for PMs who can manage these systems will only grow.

In the next post, we will look at what skills help PMs excel as AI PMs.